- ISO/IEC 22989 – Artificial intelligence concepts and terminology

- ISO/IEC 5338 – AI system life cycle processes

- ISO/IEC 23894 – Artificial intelligence – Guidance on risk management

- ISO/IEC 38507 – Governance implications of the use of artificial intelligence by organizations

- ISO/IEC 42001 – Artificial intelligence – Management system

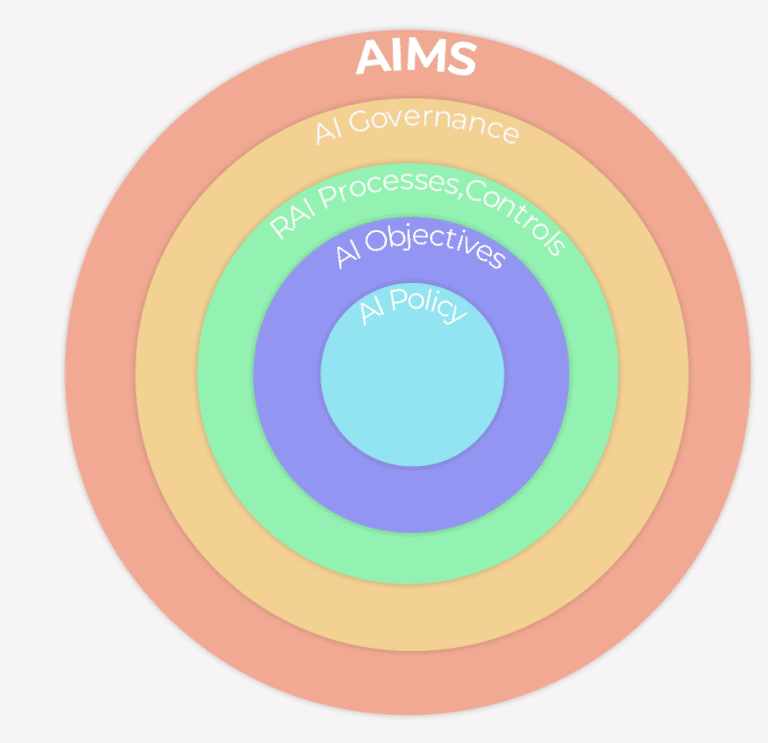

- AI policies – define the organization’s approach to RAI and ensure alignment with organizational values and legal requirements. Everything within the scope of the AIMS must be aligned with the AI policy.

- AI objectives – measurable (if practicable), AI system-specific results derived from AI policies. Every AI system must achieve its respective AI objectives.

- RAI processes and controls – the means by which the AIMS interfaces with the AI lifecycle, ensuring compliance with AI policies and objectives

- AI governance – includes organizational structure, internal audit, management review

With these work products defined, we can apply the processes and controls needed to prevent harm to users. We start at the core of the AIMS by establishing an AI policy on harm, or risk of harm, to users. For example:

Safety – the AI system should pose no unacceptable risk of physical or psychological harm to any individuals, groups, or societies that are involved in the development, provision, or use of AI systems.

Proceeding to the AI Objectives, we require a measurable, monitorable goal to realize this AI policy of safety. For example:

Human oversight – during inference, competent personnel shall always validate model outputs, flag incorrect ones, and make the final decision to classify the ad as conforming to or in violation of policy.
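To make this objective concrete, here is a minimal Python sketch of a human oversight gate for the ad classification case. It is an illustration only: the names `ModelOutput`, `FinalDecision`, and `decide_with_oversight`, the two label values, and the reviewer callback are all hypothetical and are not prescribed by ISO/IEC 42001. The point it shows is that the model only proposes a label, while the recorded decision always comes from a named reviewer.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical label set for the ad policy check.
VALID_LABELS = {"conforming", "violation"}


@dataclass(frozen=True)
class ModelOutput:
    ad_id: str
    predicted_label: str  # the model's proposal, never the final decision
    confidence: float


@dataclass(frozen=True)
class FinalDecision:
    ad_id: str
    label: str                     # label chosen by the human reviewer
    decided_by: str                # reviewer accountable for the decision
    model_flagged_incorrect: bool  # True when the reviewer overrode the model


def decide_with_oversight(
    output: ModelOutput,
    reviewer_id: str,
    review: Callable[[ModelOutput], str],
) -> FinalDecision:
    """Return a decision that is always made by a human reviewer."""
    human_label = review(output)
    if human_label not in VALID_LABELS:
        raise ValueError(f"unknown label: {human_label!r}")
    return FinalDecision(
        ad_id=output.ad_id,
        label=human_label,
        decided_by=reviewer_id,
        model_flagged_incorrect=(human_label != output.predicted_label),
    )


# Usage: the reviewer overrides a model output they judge to be wrong.
proposal = ModelOutput(ad_id="ad-1", predicted_label="conforming", confidence=0.91)
decision = decide_with_oversight(proposal, "reviewer-7", lambda o: "violation")
```

Recording `model_flagged_incorrect` alongside the reviewer identity gives the objective something measurable, for example the share of inferences that were reviewed and the override rate.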

RAI processes and controls allow us to implement the AI objective of human oversight. These include:

Risk management processes (preemptive) to:

- Assess the likelihood and consequences of risk

- Determine and implement a control (process to modify risk) to mitigate such risk to acceptable levels
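The two risk management steps above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the 1 to 5 scales, the likelihood times consequence score, and the `ACCEPTABLE_SCORE` threshold are hypothetical choices, not values taken from ISO/IEC 23894 or ISO/IEC 42001. An organization would define its own risk criteria.

```python
from dataclasses import dataclass

# Hypothetical risk acceptance threshold; each organization sets its own.
ACCEPTABLE_SCORE = 6


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int   # assumed scale: 1 (rare) to 5 (almost certain)
    consequence: int  # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.consequence):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and consequence must be in 1..5")

    @property
    def score(self) -> int:
        # Simple multiplicative scoring, one common convention among many.
        return self.likelihood * self.consequence


def needs_control(risk: Risk) -> bool:
    """A risk above the acceptable score requires a control."""
    return risk.score > ACCEPTABLE_SCORE


def apply_control(
    risk: Risk, likelihood_reduction: int = 0, consequence_reduction: int = 0
) -> Risk:
    """Return the residual risk after a control modifies the original risk."""
    return Risk(
        name=risk.name,
        likelihood=max(1, risk.likelihood - likelihood_reduction),
        consequence=max(1, risk.consequence - consequence_reduction),
    )


# Usage: human review is assumed to cut the likelihood of a harmful ad going live.
inherent = Risk("harmful ad approved", likelihood=4, consequence=4)
residual = apply_control(inherent, likelihood_reduction=3)
```

Here the inherent risk scores 16 and needs a control, while the residual risk scores 4 and falls within the assumed acceptable level.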

Nonconformity assessment and corrective action (reactive) to:

- Deal with the consequences of such a violation

- Determine measures to control and correct a violation of AI objectives

- Determine the root cause(s) of a violation of the AI objectives that has occurred

- Determine whether similar violations exist and correct similar nonconformities
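One way to keep these four reactive steps from being skipped is to track them on a single record and only allow closure once each is addressed. The sketch below is a hypothetical illustration: `NonconformityRecord` and its field names are invented for this example and do not come from ISO/IEC 42001.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NonconformityRecord:
    description: str
    consequences_handled: bool = False
    corrective_measures: List[str] = field(default_factory=list)
    root_causes: List[str] = field(default_factory=list)
    similar_cases_checked: bool = False

    def open_items(self) -> List[str]:
        """List the steps from the process above that are still outstanding."""
        items = []
        if not self.consequences_handled:
            items.append("deal with the consequences")
        if not self.corrective_measures:
            items.append("determine corrective measures")
        if not self.root_causes:
            items.append("determine root cause(s)")
        if not self.similar_cases_checked:
            items.append("check for similar nonconformities")
        return items

    def can_close(self) -> bool:
        return not self.open_items()


# Usage: an ad was classified without the required human review.
record = NonconformityRecord("Ad approved without human review")
record.consequences_handled = True
record.corrective_measures.append("Re-review the ad and block auto-approval")
remaining = record.open_items()
```

At this point `remaining` still lists the root cause analysis and the check for similar cases, so the record cannot be closed yet.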

Finally, everything is overseen by AI governance. Governance is led by top management, which is responsible not only for establishing a culture of RAI but also for creating a governance structure that ensures the organization’s compliance with ISO/IEC 42001. The governance structure shall also make clear which roles are accountable for any actions or consequences of the AI system.

We have done everything to establish RAI from the requirements side. But what happens when one of those requirements is broken? What are concrete examples of handling a nonconformity, treating risk, and establishing governance? At SRES we partner with clients to ensure integration of RAI at an organizational, management, and engineering level through the AIMS and technical processes. Learn more about RAI through our training aligned to the ISO/IEC 42001:2023 standard.